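I. Problem: memory usage on the company's server spikes and programs frequently hang

While diagnosing the problem, the operations team found that the cache had to be cleared frequently. An example command line that clears the RAM cache and swap space: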

Run: echo 3 > /proc/sys/vm/drop_caches && swapoff -a && swapon -a && printf '\n%s\n' 'Ram-cache and Swap Cleared'
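The one-liner above can be made a little more defensive. The following is only a sketch under stated assumptions: it runs sync before dropping caches, and it cycles swap only when the swap currently in use would fit into MemAvailable, because swapoff has to move those pages back into RAM. The function names swap_fits_in_ram and clear_cache_and_swap, the optional file argument, and the --run switch are hypothetical and not part of the original procedure.

```shell
#!/usr/bin/env bash
# Sketch: a more defensive variant of the cache/swap clearing one-liner.

# Succeeds (exit 0) if the swap currently in use would fit into MemAvailable.
# $1: meminfo-format file to read (hypothetical hook for testing;
#     defaults to /proc/meminfo).
swap_fits_in_ram() {
    awk '
        /^MemAvailable:/ { avail  = $2 }
        /^SwapTotal:/    { total  = $2 }
        /^SwapFree:/     { unused = $2 }
        END { exit !((total - unused) < avail) }
    ' "${1:-/proc/meminfo}"
}

# Drops the caches and, if it looks safe, resets swap. Requires root.
clear_cache_and_swap() {
    sync                                # write dirty pages to disk first
    echo 3 > /proc/sys/vm/drop_caches   # drop page cache, dentries and inodes
    if swap_fits_in_ram; then
        swapoff -a && swapon -a
        printf '\n%s\n' 'Ram-cache and Swap Cleared'
    else
        printf '\n%s\n' 'Ram-cache cleared; swap left untouched (would not fit in RAM)'
    fi
}

# Nothing is cleared unless the script is called with --run.
if [ "${1:-}" = "--run" ]; then
    clear_cache_and_swap
fi
```

Saved as a script, it would be invoked as root with the --run argument; without it, the script only defines the functions.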

II. Troubleshooting process

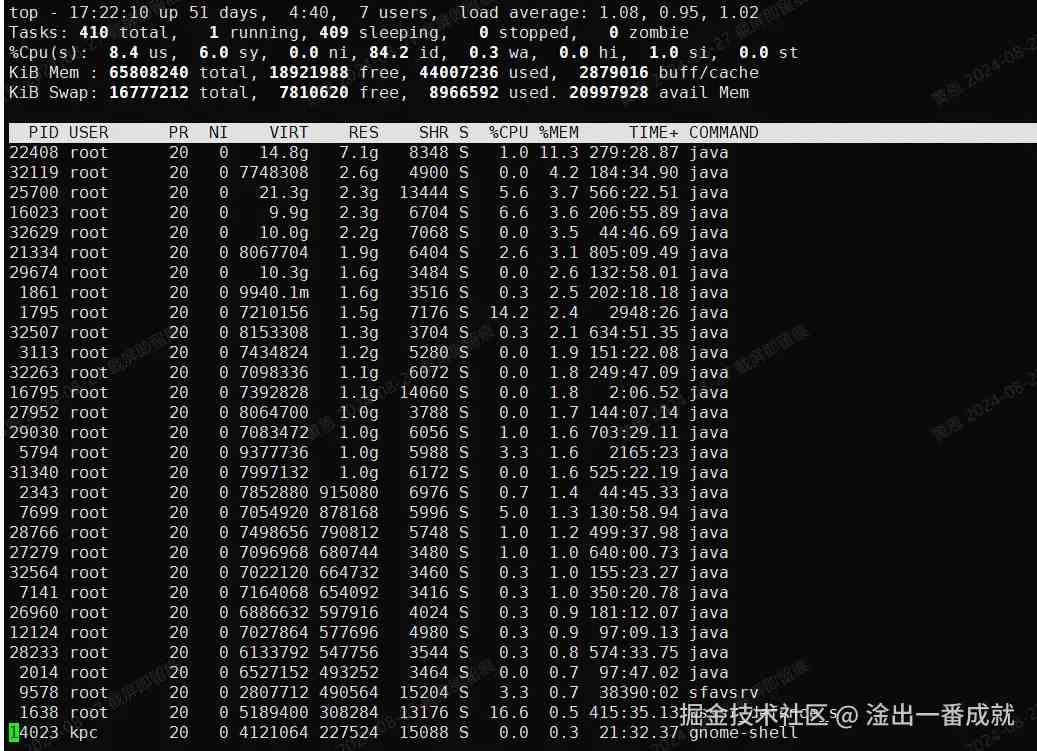

2-1. Check system resource usage, sorted by memory

Run top, then press Shift + M.
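For scripts or remote sessions where an interactive top is inconvenient, roughly the same ranking can be produced from ps output. This is a sketch, not what the original procedure used: top_by_rss is a hypothetical helper that expects "PID RSS_in_KiB COMMAND" lines on standard input, and the ps invocation in the comment assumes a procps-style ps that supports the rss output column.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive equivalent of "top, then Shift + M".

# Reads "PID RSS_KiB COMMAND" lines on stdin and prints the N entries
# with the largest resident set size (default 10), RSS shown in MiB.
# Only the first word of COMMAND is printed.
top_by_rss() {
    sort -k2,2nr | head -n "${1:-10}" |
        awk '{ printf "%-8s %8.1f MiB  %s\n", $1, $2 / 1024, $3 }'
}

# Usage (the trailing "=" suppresses the column headers):
#   ps -eo pid=,rss=,comm= | top_by_rss 10
```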

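2-2. Identify the processes that actually occupy the memory

ps -ef | grep <pid>

Here <pid> is the process ID, taken from the first column of the top output in the previous step.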

[root@fpserver1 ~]# ps -ef | grep 22408

root 14975 13128 0 17:24 pts/4 00:00:00 grep --color=auto 22408

root 22408 1 1 8月13 ? 04:39:30 java -server -Xmx8192m -Xms8192m -XX:+PrintGCDetails -Xloggc:/data/logs/xx-service_gc_log.out -XX:+PrintGCDateStamps -jar -Djava.io.tmpdir=/data/dkh-xx-service-dkh-2/tmpdir -DMODE= -DDOMAIN=xx.xx.com -DAPP_HOME=/data -DAPP_NAME=xx-service -DINNER_IP=10.101.2.42 -DEUREKA_DOMAIN=http://registerserver-xx.com:8889/eureka -DDEPLOY_SERVICE_IP=INNER_IP -Djava.security.egd=file:/dev/./urandom xx-service.jar --server.port=9123 --eureka.client.serviceUrl.defaultZone=http://xxx:8889/eureka,http://xxx:8889/eureka --spring.profiles.active=dkh

[root@fpserver1 ~]# ps -ef | grep 25700

root 25700 1 0 7月12 ? 09:27:04 //bin/java -Djava.util.logging.config.file=/data/tomcat_solr/conf/logging.properties -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -Djdk.tls.ephemeralDHKeySize=2048 -Djava.protocol.handler.pkgs=org.apache.catalina.webresources -Dorg.apache.catalina.security.SecurityListener.UMASK=0027 -Dignore.endorsed.dirs= -classpath /data/tomcat_solr/bin/bootstrap.jar:/data/tomcat_solr/bin/tomcat-juli.jar -Dcatalina.base=/data/tomcat_solr -Dcatalina.home=/data/tomcat_solr -Djava.io.tmpdir=/data/tomcat_solr/temp org.apache.catalina.startup.Bootstrap start

root 31449 13128 0 17:39 pts/4 00:00:00 grep --color=auto 25700

The xx-service process is started with -Xms8192m (and -Xmx8192m), and top reports its RES as 7.1g, so its memory usage is normal.
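The comparison above (configured heap size against resident memory) can be scripted. The sketch below is an illustration only: jvm_flag_mib and rss_mib are hypothetical helper names, and the parsing assumes the flag is written in the usual -Xmx<number><unit> form with a k, m or g suffix, or plain bytes.

```shell
#!/usr/bin/env bash
# Sketch: compare a JVM's configured heap size with its resident memory.

# Prints the value of a JVM size flag (for example Xmx or Xms) in MiB,
# taken from a command line string. Prints nothing if the flag is absent.
jvm_flag_mib() {
    local flag=$1 cmdline=$2
    printf '%s\n' "$cmdline" | tr ' ' '\n' |
        awk -v flag="-$flag" '
            index($0, flag) == 1 {
                v = substr($0, length(flag) + 1)
                unit = tolower(substr(v, length(v)))
                n = v + 0
                if (unit == "g")      n *= 1024
                else if (unit == "k") n /= 1024
                else if (unit != "m") n /= 1048576   # no suffix: plain bytes
                printf "%d\n", n
                exit
            }'
}

# Prints the resident set size of process $1 in MiB (Linux /proc only).
rss_mib() {
    awk '/^VmRSS:/ { printf "%d\n", $2 / 1024 }' "/proc/$1/status"
}

# Usage, with the PID from the ps output above:
#   pid=22408
#   jvm_flag_mib Xmx "$(tr '\0' ' ' < "/proc/$pid/cmdline")"
#   rss_mib "$pid"
```

RES also includes non-heap memory such as metaspace, thread stacks and native libraries, so a value somewhat above -Xmx is not necessarily a leak; here 7.1g is below the 8 GiB heap limit.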

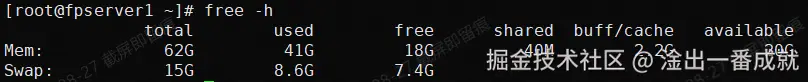

2-3. Memory status:

free -h

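The buff/cache figure is very high, and it keeps climbing again after being cleared. The cache clearing that the operations team performs is exactly a purge of this buffer/cache data.

Note: on Linux, buff/cache is the part of system memory used for the file cache and I/O buffers. It is not owned directly by any single process; it is a resource managed by the kernel to improve file system performance and reduce disk I/O.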
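To see what the buff/cache figure is made of, the relevant fields can be read from /proc/meminfo. This is a sketch under an assumption: recent procps-ng versions of free are understood to compute buff/cache as Buffers + Cached + SReclaimable, and the kernel counts tmpfs and shared memory pages (Shmem) inside Cached. The function name cache_breakdown and its optional file argument are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: break the buff/cache figure from "free" into its parts.

# $1: meminfo-format file to read (hypothetical hook for testing;
#     defaults to /proc/meminfo).
cache_breakdown() {
    awk '
        /^Buffers:/      { buffers = $2 }
        /^Cached:/       { cached  = $2 }
        /^SReclaimable:/ { srecl   = $2 }
        /^Shmem:/        { shmem   = $2 }
        END {
            total = buffers + cached + srecl
            # Assumed formula for the buff/cache column of free.
            printf "%-10s %d MiB\n", "buff/cache", total / 1024
            # tmpfs and shared memory pages; drop_caches does not free these.
            printf "%-10s %d MiB\n", "shmem", shmem / 1024
            # File cache, buffers and reclaimable slab (approximate).
            printf "%-10s %d MiB\n", "other", (total - shmem) / 1024
        }
    ' "${1:-/proc/meminfo}"
}

if [ -r /proc/meminfo ]; then
    cache_breakdown
fi
```

A large shmem line would suggest that much of buff/cache lives on tmpfs or in shared memory segments rather than in ordinary file cache.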

Use hcache to find out which processes are responsible for the high buffer/cache usage

1. Download hcache

GitHub: https://github.com/silenceshell/hcache

2. How to use hcache

The download is a single binary file. Make it executable with chmod and it is ready to use:

chmod 755 hcache

(Optional) Move hcache into /usr/local/bin so that the command can be invoked from any directory:

mv hcache /usr/local/bin/

3. Use hcache to inspect buffer/cache usage

[root@fpserver1 kpc]# ./hcache -top 10

| Name | Size (bytes) | Pages | Cached | Percent |
|---|---|---|---|---|
| /data/xxx/goldenbooksdk/libgoldenbooksdk_parser.so | 76748866 | 18738 | 18738 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000053dee-00061de26ef5d494.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000038493-00061d6c1149190a.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000000001-00061c8ccc75efd1.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000000dfed-00061cc23feafba1.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-00000000000a7c76-00061f453289495e.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000008b819-00061ecf996ae19f.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-00000000000c3a08-00061fbba240fa34.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000099ba7-00061f0a53131362.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000061b9e-00061e1df90b2163.journal | 67108864 | 16384 | 16384 | 100.000 |

3-1. Usage after clearing the cache

[root@fpserver1 kpc]# ./hcache -top 10

| Name | Size (bytes) | Pages | Cached | Percent |
|---|---|---|---|---|
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000001bed8-00061cfb50fc93b2.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000053dee-00061de26ef5d494.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000038493-00061d6c1149190a.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-0000000000000001-00061c8ccc75efd1.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000004606f-00061da6f6ba3df3.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000000dfed-00061cc23feafba1.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-00000000000a7c76-00061f453289495e.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000007da61-00061e94285f61a3.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000006fc6c-00061e58f9507585.journal | 67108864 | 16384 | 16384 | 100.000 |
| /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000002a0b3-00061d33e16097b9.journal | 67108864 | 16384 | 16384 | 100.000 |
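Journal files fill nine of the top ten slots before clearing and all ten afterwards. To put a number on how much cache one directory accounts for, the hcache table can be summed per path prefix. This is a sketch: cached_mib_for_prefix is a hypothetical helper, it assumes the pipe-separated column layout shown in the listings, and it assumes 4 KiB pages (consistent with 67108864 bytes / 16384 pages in the output).

```shell
#!/usr/bin/env bash
# Sketch: total the cached pages reported by hcache for one path prefix.

# Reads an hcache-style table on stdin and sums the "Cached" column for
# every row whose Name starts with $1. Prints the total in MiB.
cached_mib_for_prefix() {
    awk -F'|' -v prefix="$1" '
        {
            name = $2
            gsub(/^[ \t]+|[ \t]+$/, "", name)    # trim the padded cell
            if (index(name, prefix) == 1) pages += $5
        }
        END { printf "%d\n", pages * 4096 / 1048576 }   # 4 KiB pages assumed
    '
}

# Usage:
#   ./hcache -top 10 | cached_mib_for_prefix /run/log/journal
```

Only the rows hcache prints are counted, so the result is a lower bound whenever more matching files exist than the -top limit shows.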

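4. Identify the processes behind the high usage

The files under /run/log/journal consistently occupy shared memory. On systemd-based systems /run is normally a tmpfs, so these files live in memory and remain there after the cache is dropped.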

[root@fpserver1 kpc]# lsof /data/xxx/goldenbooksdk/libgoldenbooksdk_parser.so

lsof: WARNING: can't stat() fuse.gvfsd-fuse file system /run/user/1000/gvfs

Output information may be incomplete.

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

java 22408 root mem REG 253,0 76748866 270080875 /data/xxx/goldenbooksdk/libgoldenbooksdk_parser.so

[root@fpserver1 kpc]# lsof /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000001bed8-00061cfb50fc93b2.journal

lsof: WARNING: can't stat() fuse.gvfsd-fuse file system /run/user/1000/gvfs

Output information may be incomplete.

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

rsyslogd 8400 root mem REG 0,20 67108864 191144463 /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000001bed8-00061cfb50fc93b2.journal

rsyslogd 8400 root 26r REG 0,20 67108864 191144463 /run/log/journal/60f9704648984835bdf7aacaa672545b/system@845d4f795c124ae395dae474a5bf383c-000000000001bed8-00061cfb50fc93b2.journal

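The lsof command identifies the processes that occupy large amounts of shared memory. Here, the rsyslogd service has the journal files mapped into memory and may be consuming a large amount of it, so it needs further investigation. The next step is to investigate rsyslogd's memory usage.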
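Running lsof file by file becomes tedious when many files are involved. As a sketch, the combined lsof output can be tallied per process, counting only memory-mapped entries (FD column equal to mem, as in the listings above). The helper name mappers_by_count is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: rank processes by how many of the given files they have mapped.

# Reads lsof output on stdin and prints "COUNT COMMAND PID" for every
# process with memory-mapped entries (FD == "mem"), largest count first.
mappers_by_count() {
    awk '$4 == "mem" { n[$1 " " $2]++ }
         END { for (k in n) print n[k], k }' | sort -rn
}

# Usage (lsof warnings go to stderr and are discarded):
#   for f in /run/log/journal/*/*.journal; do
#       lsof "$f" 2>/dev/null
#   done | mappers_by_count
```

A process that appears at the top with a count close to the number of journal files is the one keeping them mapped.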

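III. Summary

When handling system performance problems, operations staff need to manage memory and cache effectively. Command-line tools and a handful of system commands are enough to identify and resolve excessive memory usage, and hcache helps pinpoint and analyze cache usage more precisely.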
